The World’s Most Powerful AI Supercomputers

This was originally posted on our Voronoi app.

- xAI’s Colossus cluster in Memphis leads the world with an estimated 200,000 Nvidia H100 chip equivalents.

- Meta and Microsoft/OpenAI each operate compute clusters with an estimated 100,000 H100 equivalents, while Oracle’s largest cluster has 65,536.

- The cost and power demands of these systems are rising sharply, with costs doubling roughly every 13 months.

Today, the rapid rise of AI is fueling a new arms race for compute power.

Tech companies are rapidly building AI supercomputers, also known as GPU clusters or AI datacenters, by packing them with ever-growing numbers of chips for training advanced AI models. So far, the U.S. has taken the lead, as Big Tech firms pour billions into AI infrastructure to secure a competitive edge.

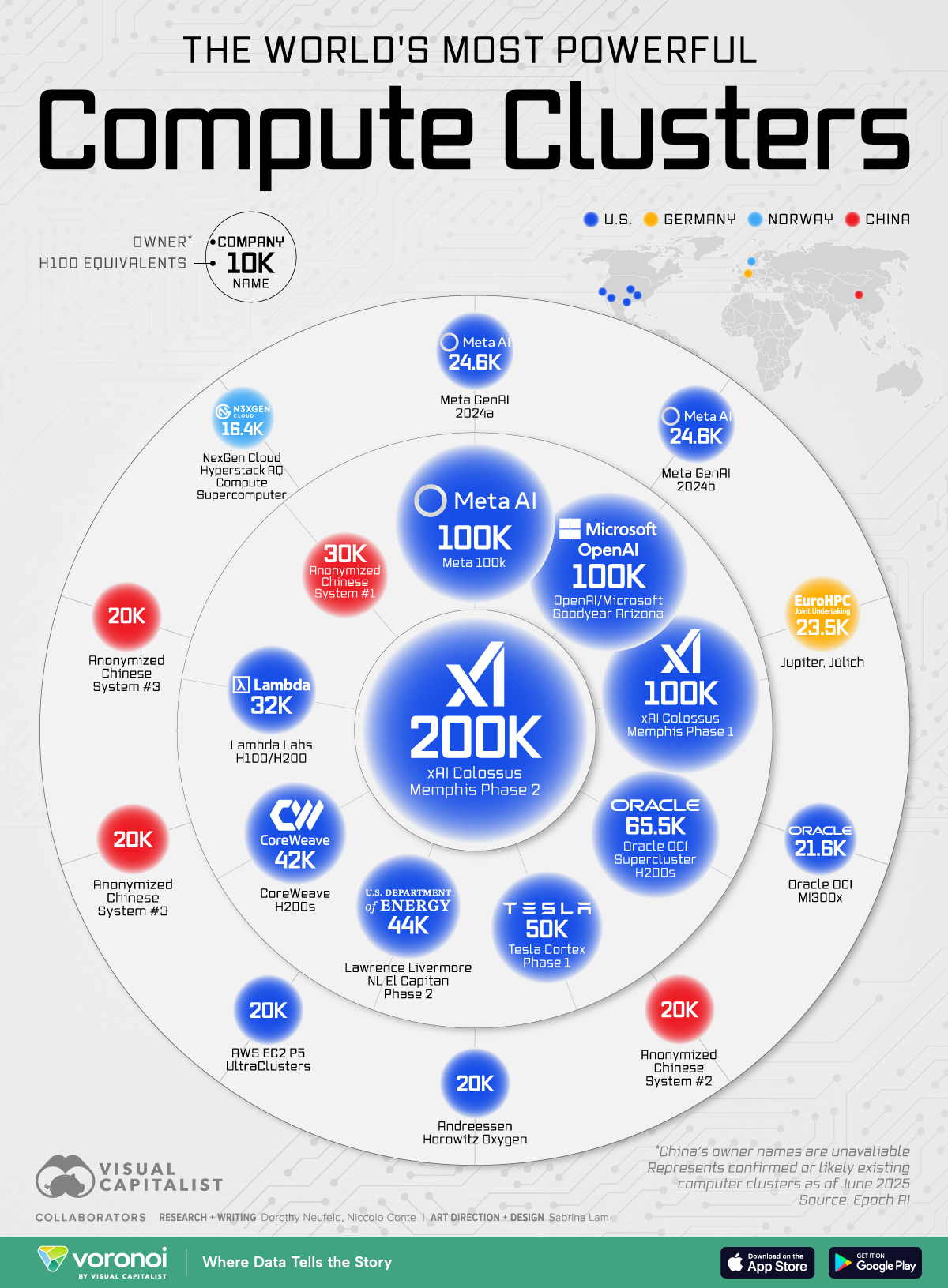

This graphic shows the world’s leading compute clusters, based on data from Epoch AI.

Ranked: The Top 20 Compute Clusters Globally

Below, we show the most powerful AI supercomputers in the world as of mid-2025:

| Compute Cluster | H100 Equivalents | Owner | Country | Certainty |
|---|---|---|---|---|
| xAI Colossus Memphis Phase 2 | 200000 | xAI | U.S. | Likely |
| Meta 100k | 100000 | Meta AI | U.S. | Likely |
| OpenAI/Microsoft Goodyear Arizona | 100000 | Microsoft, OpenAI | U.S. | Likely |
| xAI Colossus Memphis Phase 1 | 100000 | xAI | U.S. | Confirmed |
| Oracle OCI Supercluster H200s | 65536 | Oracle | U.S. | Likely |
| Tesla Cortex Phase 1 | 50000 | Tesla | U.S. | Confirmed |
| Lawrence Livermore NL El Capitan Phase 2 | 44143 | U.S. Department of Energy | U.S. | Confirmed |
| CoreWeave H200s | 42000 | CoreWeave | U.S. | Likely |
| Lambda Labs H100/H200 | 32000 | Lambda Labs | U.S. | Likely |
| Anonymized Chinese System | 30000 | N/A | China | Confirmed |
| Meta GenAI 2024a | 24576 | Meta AI | U.S. | Confirmed |
| Meta GenAI 2024b | 24576 | Meta AI | U.S. | Confirmed |
| Jupiter, Jülich | 23536 | EuroHPC JU, Jülich Supercomputing Center | Germany | Confirmed |
| Oracle OCI MI300x | 21649 | Oracle | U.S. | Likely |
| Anonymized Chinese System | 20000 | N/A | China | Confirmed |
| Andreessen Horowitz Oxygen | 20000 | Andreessen Horowitz | U.S. | Likely |
| AWS EC2 P5 UltraClusters | 20000 | Amazon | U.S. | Likely |
| Anonymized Chinese System | 20000 | N/A | China | Likely |
| Anonymized Chinese System | 20000 | N/A | China | Confirmed |
| NexGen Cloud Hyperstack AQ Compute Supercomputer | 16384 | NexGen Cloud | Norway | Likely |
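To put the U.S. lead in numbers, the table can be tallied by country. The sketch below is a rough illustration rather than part of Epoch AI’s analysis: it copies the 20 rows by hand and treats each row as a separate system, which is an assumption, since entries such as the two Colossus phases may overlap. The names `CLUSTERS` and `totals_by_country` are made up for this example.

```python
from collections import defaultdict

# (country, H100 equivalents) for each of the 20 rows in the table above.
# Assumption: every row is counted as a separate system, even though
# phased builds (e.g. Colossus Phase 1 and Phase 2) may overlap.
CLUSTERS = [
    ("U.S.", 200000), ("U.S.", 100000), ("U.S.", 100000), ("U.S.", 100000),
    ("U.S.", 65536), ("U.S.", 50000), ("U.S.", 44143), ("U.S.", 42000),
    ("U.S.", 32000), ("China", 30000), ("U.S.", 24576), ("U.S.", 24576),
    ("Germany", 23536), ("U.S.", 21649), ("China", 20000), ("U.S.", 20000),
    ("U.S.", 20000), ("China", 20000), ("China", 20000), ("Norway", 16384),
]


def totals_by_country(rows):
    """Sum H100 equivalents per country."""
    totals = defaultdict(int)
    for country, h100_equivalents in rows:
        totals[country] += h100_equivalents
    return dict(totals)


totals = totals_by_country(CLUSTERS)
grand_total = sum(totals.values())

# Print countries from largest to smallest share of the top-20 total.
for country, amount in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{country:8s} {amount:>8,d}  {amount / grand_total:.1%}")
```

Under that row-by-row assumption, U.S. systems account for well over four-fifths of the H100 equivalents in this top-20 list.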

xAI’s Colossus Memphis Phase 2 tops the chart with 200,000 H100 equivalents—twice the size of the next largest cluster.

Colossus is rated at 20.6 on a base-10 logarithmic scale of non-sparse operations per second, which works out to roughly 400 quintillion operations per second. That would be enough compute power to complete the full two-week training run of OpenAI’s 2020 GPT-3 model in under two hours.
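The conversion from the log-scale figure to operations per second, and the GPT-3 comparison, can be sketched in a few lines. Only the 20.6 figure comes from this article. The GPT-3 training compute of about 3.14e23 operations is taken from the GPT-3 paper, and the utilization levels are hypothetical values chosen for illustration, so treat the output as a sanity check rather than a benchmark.

```python
# Figure quoted in the article: log10 of non-sparse operations per second.
COLOSSUS_LOG10_OPS_PER_SEC = 20.6

# Assumption (not from the article): total GPT-3 training compute of about
# 3.14e23 floating-point operations, as reported in the GPT-3 paper.
GPT3_TRAINING_OPS = 3.14e23


def ops_per_second(log10_value: float) -> float:
    """Convert a base-10 log value back to operations per second."""
    return 10.0 ** log10_value


def training_hours(total_ops: float, ops_per_sec: float, utilization: float) -> float:
    """Hours needed to perform total_ops at a given fraction of peak throughput."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return total_ops / (ops_per_sec * utilization) / 3600.0


colossus_ops = ops_per_second(COLOSSUS_LOG10_OPS_PER_SEC)
print(f"{colossus_ops:.2e} operations per second")
print(f"about {colossus_ops / 1e18:.0f} quintillion per second")

# Hypothetical utilization levels; real training runs fall short of peak.
for utilization in (1.0, 0.3):
    hours = training_hours(GPT3_TRAINING_OPS, colossus_ops, utilization)
    print(f"utilization {utilization:.0%}: {hours:.2f} hours")
```

Even at the lower assumed utilization, the estimate stays under the two-hour mark cited above.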

Other major players are also scaling up. Meta’s 100K system, Microsoft/OpenAI’s Goodyear cluster, and Oracle’s H200-based machine follow, each with between 65,536 and 100,000 H100 equivalents.

Google is notably absent from the ranking, and Amazon appears only near the bottom with its AWS EC2 P5 UltraClusters. Both companies have disclosed comparatively little information about their AI hardware builds.
