California Bills on Social Media and AI Chatbots Fuel Privacy Fears

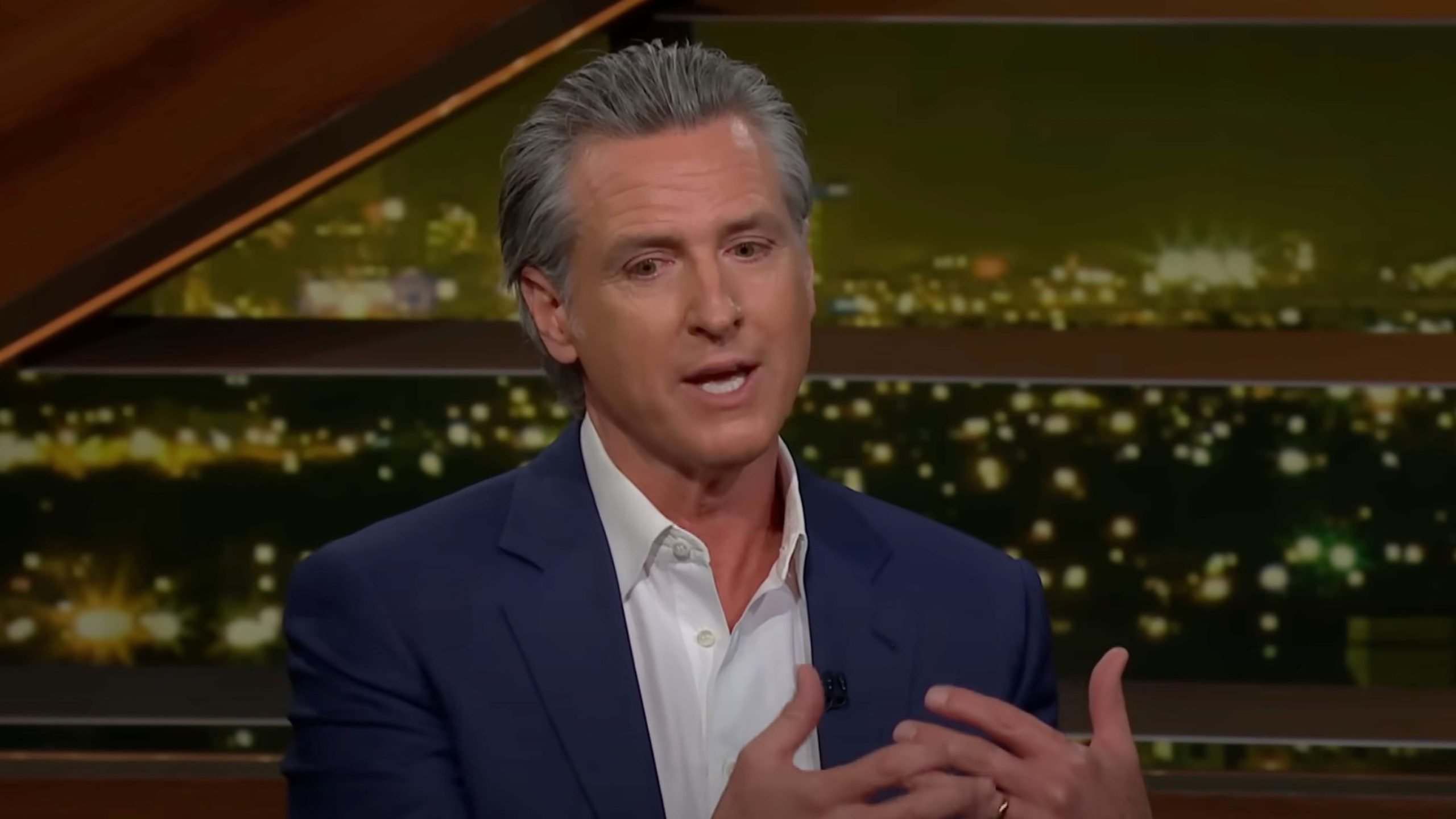

Two controversial tech-related bills have cleared the California legislature and now await decisions from Governor Gavin Newsom, setting the stage for a potentially significant change in how social media and AI chatbot platforms interact with their users.

Both proposals raise red flags among privacy advocates who warn they could normalize government-driven oversight of digital spaces.

The first, Assembly Bill 56, would require social media companies to display persistent mental health warnings to minors using their platforms.

Drawing from a 2023 US Surgeon General report, the legislation mandates that platforms such as Instagram, TikTok, and Snapchat show black-box warning labels about potential harm to youth mental health.

The alert would appear for ten seconds at login, again after three hours of use, and once every hour after that.
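The display schedule described above can be sketched in a few lines. This is only an illustration of the timing as the article reports it (a ten-second notice at login, a repeat after three hours of use, then one every hour). The function and constant names are hypothetical, and the bill's actual text may define usage and sessions differently.

```python
from datetime import timedelta

# Timing values as reported in the article; names are illustrative only.
LOGIN_DISPLAY = timedelta(seconds=10)   # how long the login warning stays up
FIRST_REPEAT = timedelta(hours=3)       # first repeat after three hours of use
REPEAT_INTERVAL = timedelta(hours=1)    # then once every hour after that


def warning_offsets(usage: timedelta) -> list[timedelta]:
    """Return the usage offsets at which a warning would have been shown."""
    offsets = [timedelta(0)]            # the warning shown at login
    next_due = FIRST_REPEAT
    while next_due <= usage:
        offsets.append(next_due)
        next_due += REPEAT_INTERVAL
    return offsets


if __name__ == "__main__":
    # A minor who has used the app for five hours in this sketch would have
    # seen the warning at login and at the 3-, 4-, and 5-hour marks.
    for offset in warning_offsets(timedelta(hours=5)):
        print(offset)
```

Under these assumptions, a user who stays under three hours sees only the login warning.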

Supporters, including Assemblymember Rebecca Bauer-Kahan and Attorney General Rob Bonta, claim the bill is necessary to respond to what they describe as a youth mental health emergency.

Critics of the bill argue it inserts state messaging into private platforms in a way that undermines user autonomy and treats teens as passive recipients of technology rather than as individuals capable of making informed choices.

Newsom has until October 13 to sign or veto the measure.

The second proposal, Senate Bill 243, could significantly expand the surveillance of private conversations between users and AI-powered chatbots.

The legislation would require companies that operate so-called companion chatbots to detect and respond when users express suicidal ideation or mention self-harm or suicide.

These platforms would also be required to report data to the state’s Office of Suicide Prevention each year, including the number of instances in which users expressed suicidal thoughts and how often chatbots raised the issue themselves.

In practice, these requirements would force chatbot providers to monitor user interactions in real time.

This structure places emotional well-being in direct tension with user privacy, effectively institutionalizing a system in which companies must watch, analyze, and report on the most intimate conversations people might have with AI companions.
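To make the annual reporting requirement concrete, here is a minimal sketch of the two tallies the article mentions: how often users expressed suicidal thoughts and how often the chatbot raised the issue itself. The article does not describe the report's format, so the `Event` record, the `kind` labels, and the output field names are all assumptions, as is the choice to report only aggregate counts.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Event:
    """Hypothetical record of one flagged moment in a conversation."""
    year: int
    kind: str  # assumed labels: "user_expressed" or "chatbot_raised"


def annual_report(events: Iterable[Event], year: int) -> dict[str, int]:
    """Tally the two counts the article describes for a single year."""
    counts = Counter(e.kind for e in events if e.year == year)
    return {
        "year": year,
        "user_expressed_ideation": counts["user_expressed"],
        "chatbot_raised_topic": counts["chatbot_raised"],
    }


if __name__ == "__main__":
    sample = [
        Event(2025, "user_expressed"),
        Event(2025, "chatbot_raised"),
        Event(2024, "user_expressed"),
    ]
    print(annual_report(sample, 2025))
```

Even in this stripped-down form, producing the counts presupposes that each conversation has been scanned and classified, which is the monitoring concern the critics raise.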

Attorney General Bonta added fuel to the campaign for social media warning labels last year, stating, “Social media companies have demonstrated an unwillingness to tackle the mental health crisis, instead digging in deeper into harnessing addictive features and harmful content for the sake of profits.”

He emphasized that while warning labels alone are not enough, they represent a necessary part of the response.

Minnesota became the first state to pass a law of this kind earlier this year, applying its warning label rules to all users, not just minors.

New York Governor Kathy Hochul is currently considering a bill similar to California’s AB 56, signaling a broader trend toward aggressive regulation of social media platforms.

Supporters view these proposals as overdue corrections to an unchecked industry.

Privacy advocates argue they amount to state overreach that could entrench mass monitoring in environments that were once assumed to be private.

With Newsom’s decisions expected in the coming weeks, California is positioned to either double down on this surveillance-centered approach or push back on the steady expansion of state authority into digital communication.